Video Tutorial

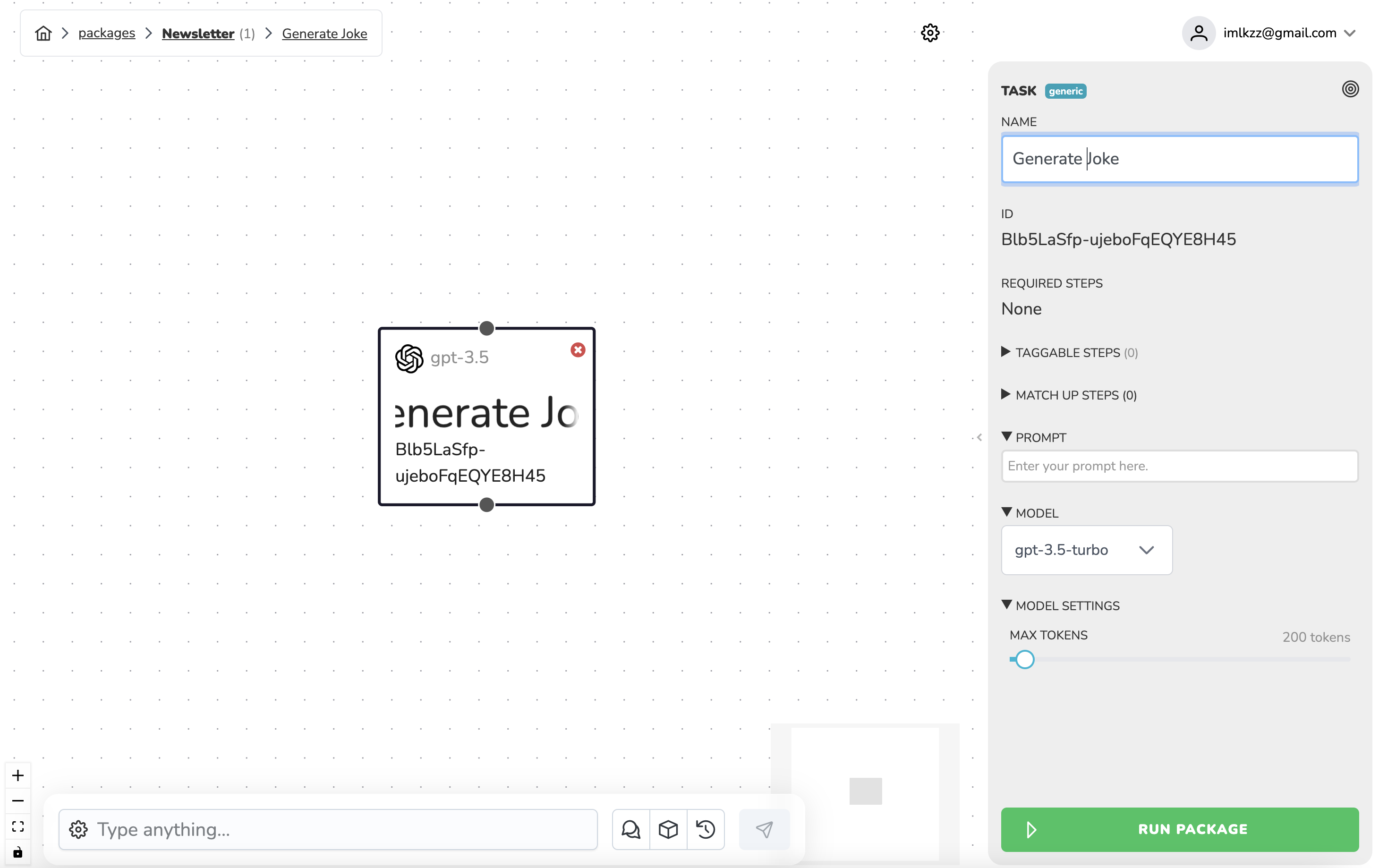

Watch this video to see how to create a simple package using the LLM node.

How do I add it to my package?

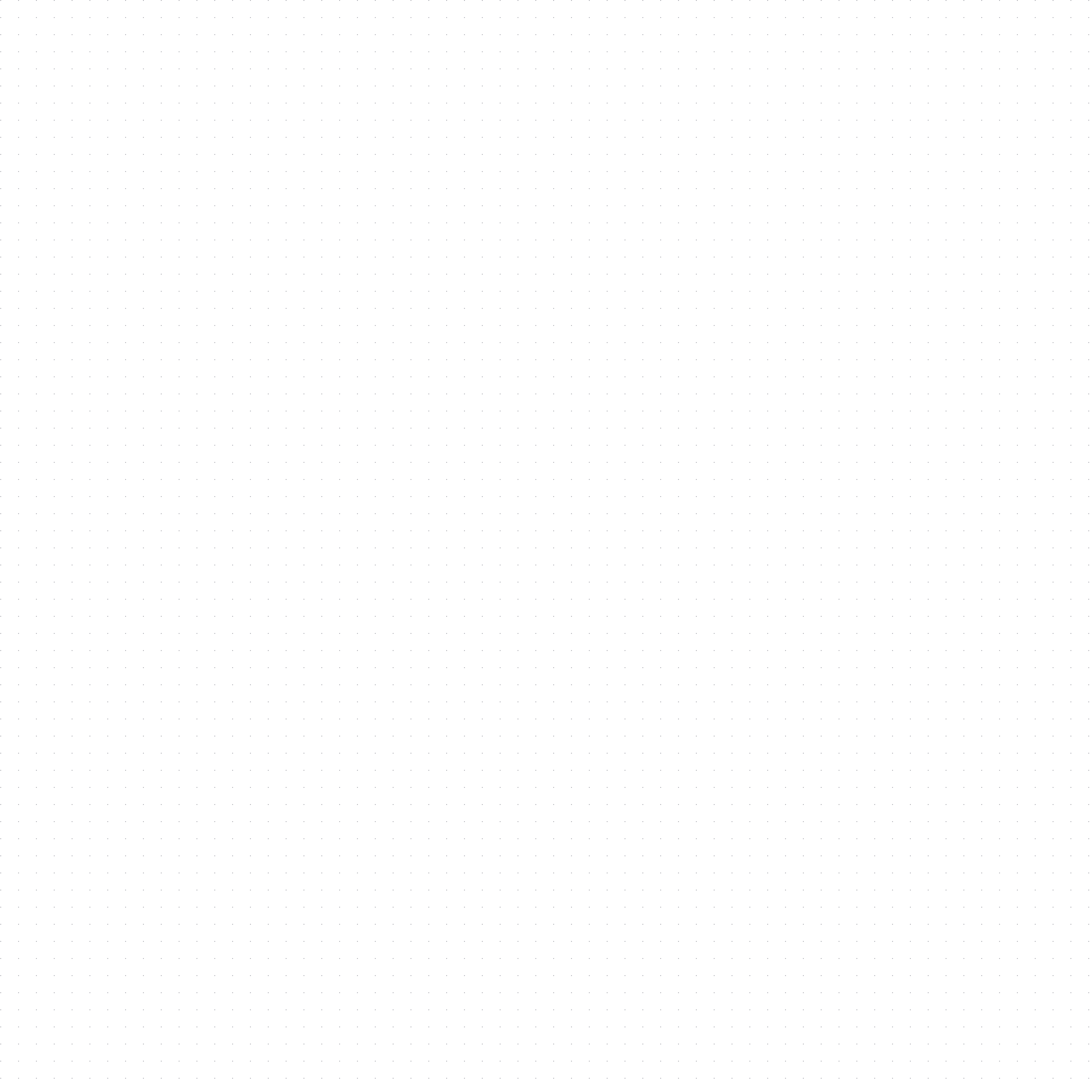

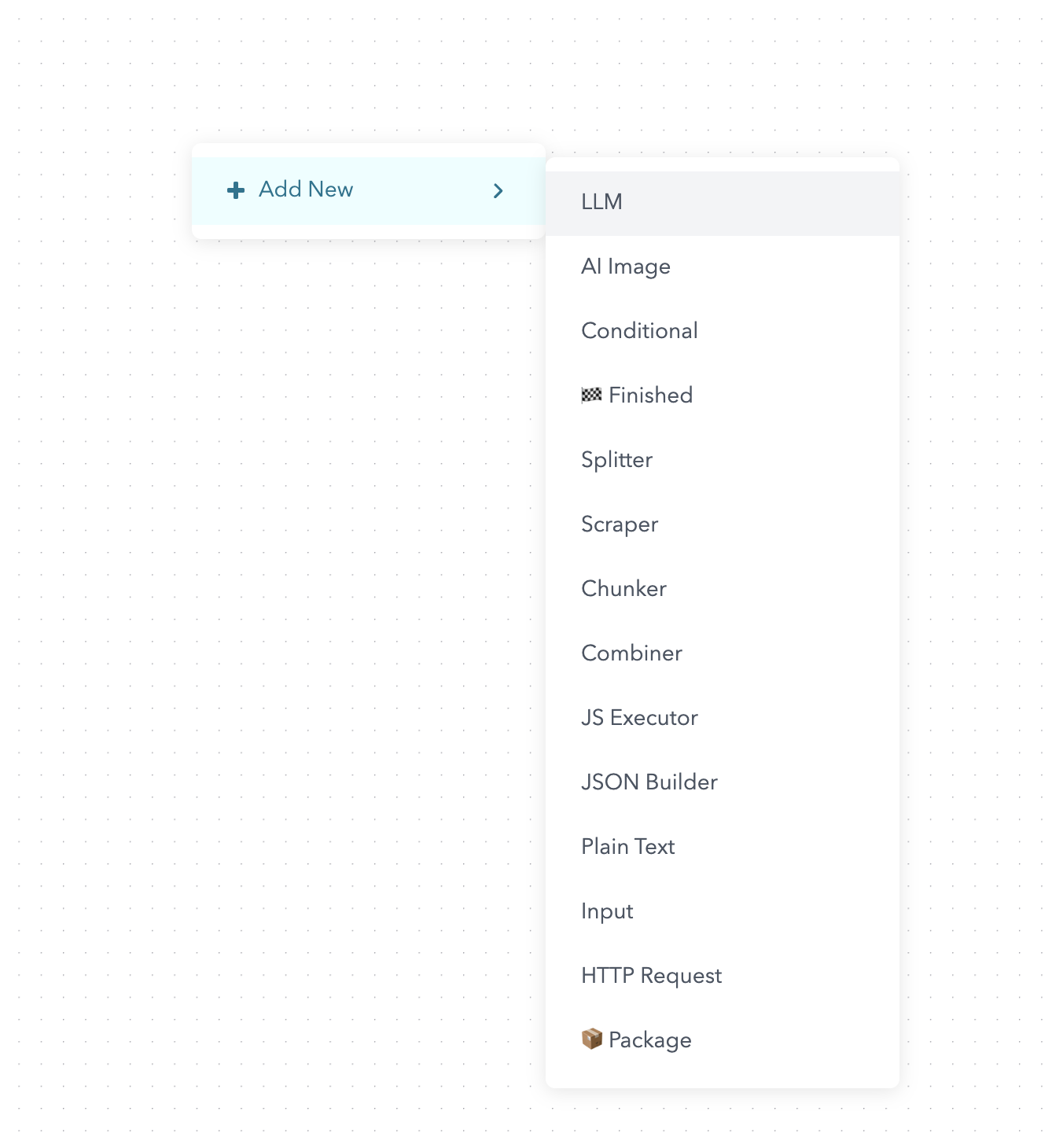

To add the LLM node to your package, right-click on the workspace grid and select Add Item.

Configuring the LLM node

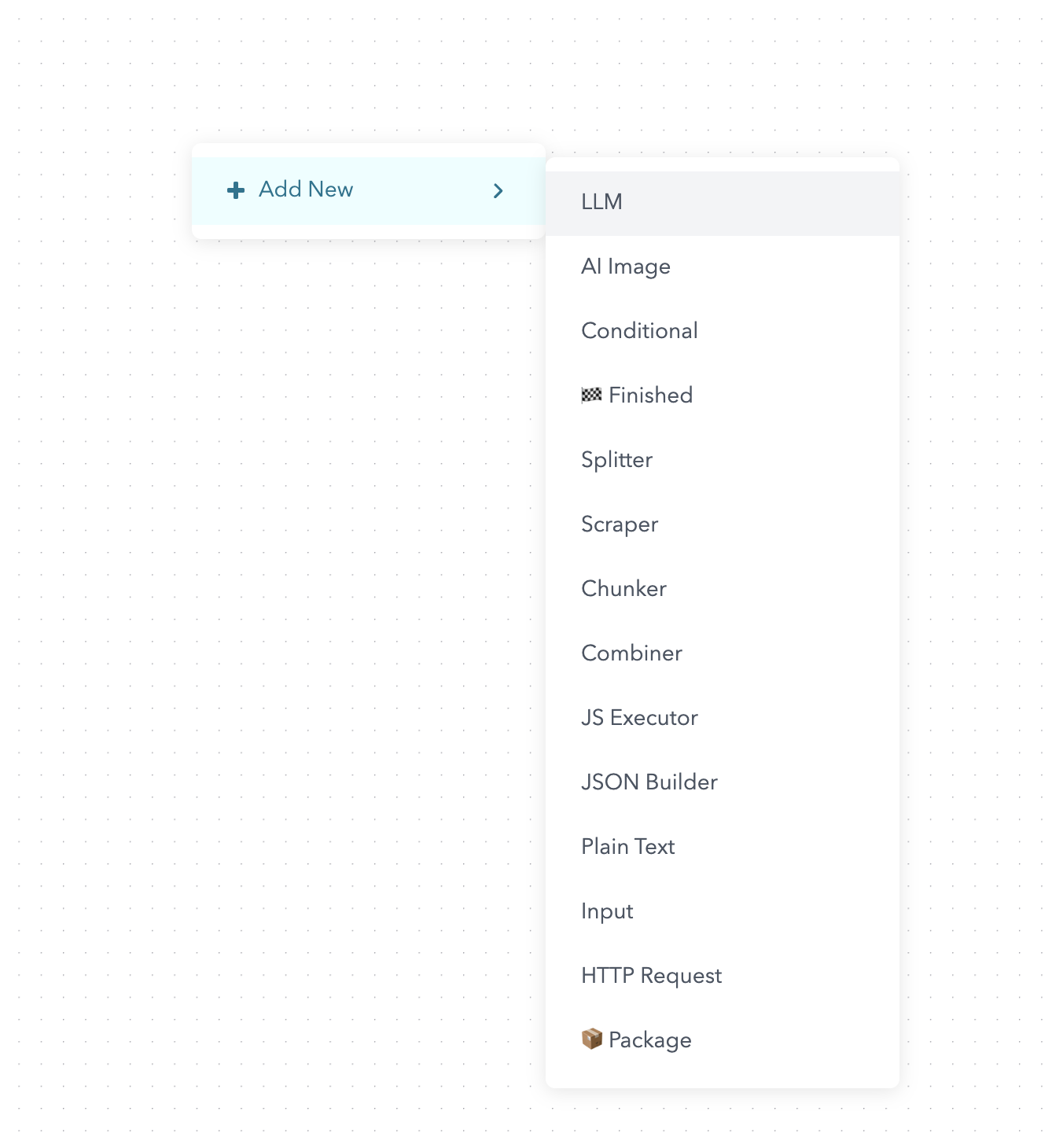

When you first add the LLM node to your package, you will see an Untitled node with GPT-3.5 selected as the model.

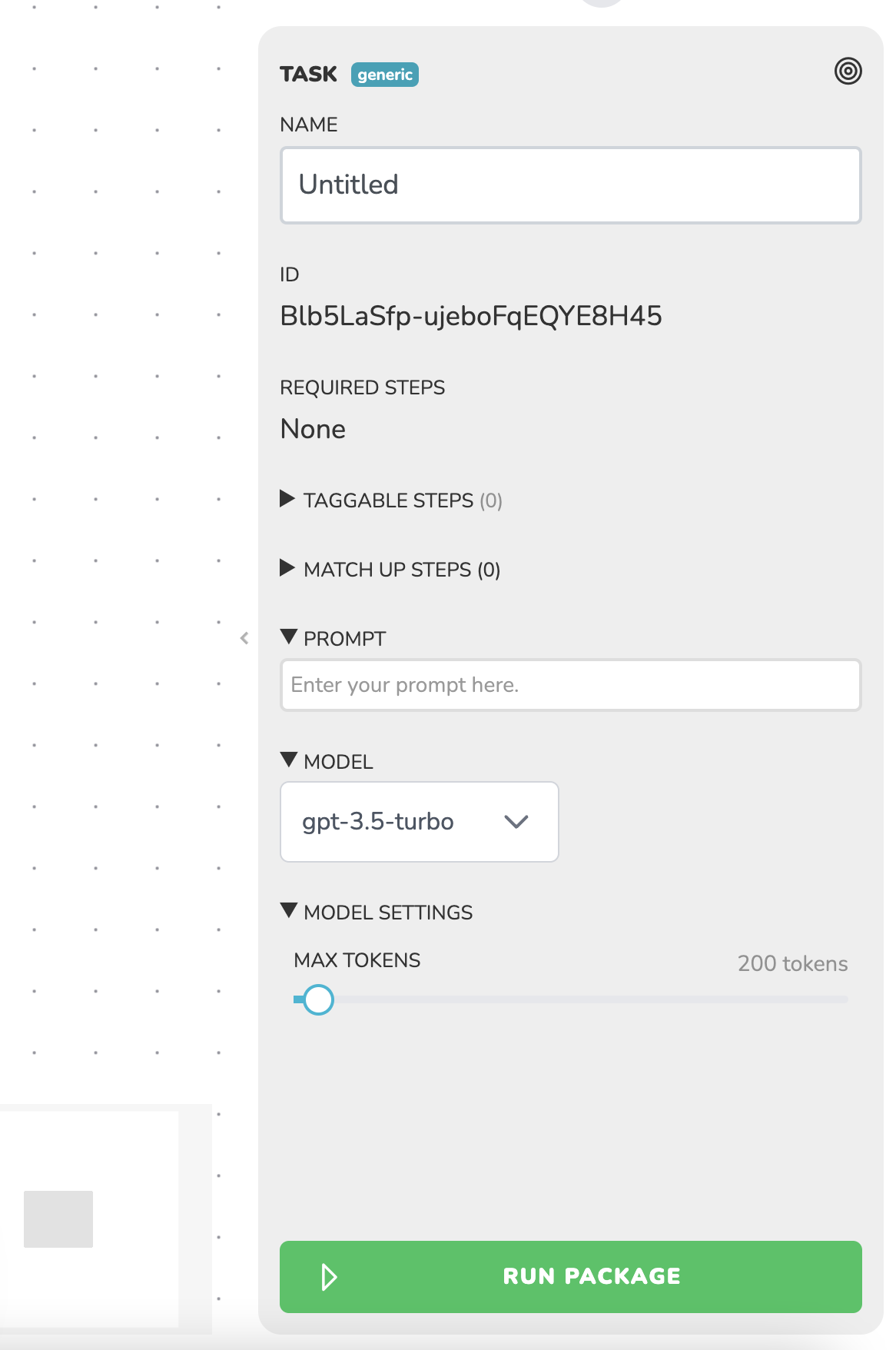

Changing the name of the node

In the task panel on the right-hand side of the screen, click the “Untitled” text in the input field to change the name. Your change is saved automatically. For this example, let's name the node Generate Joke.

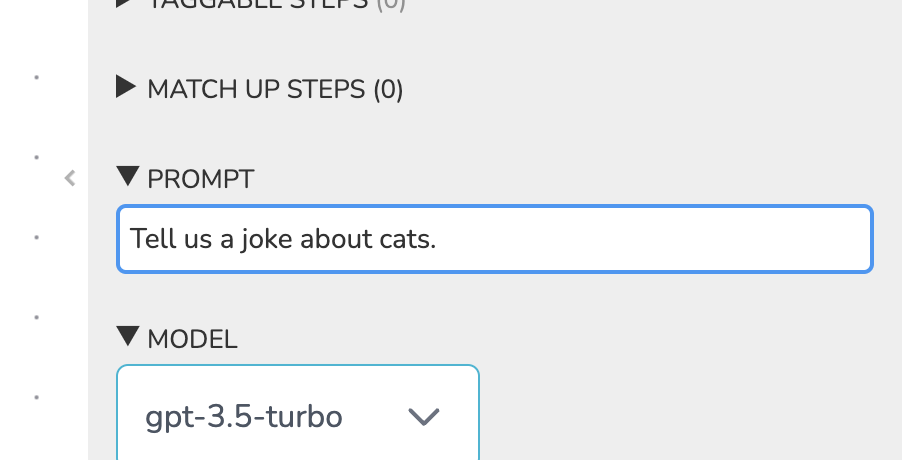

Setting the prompt

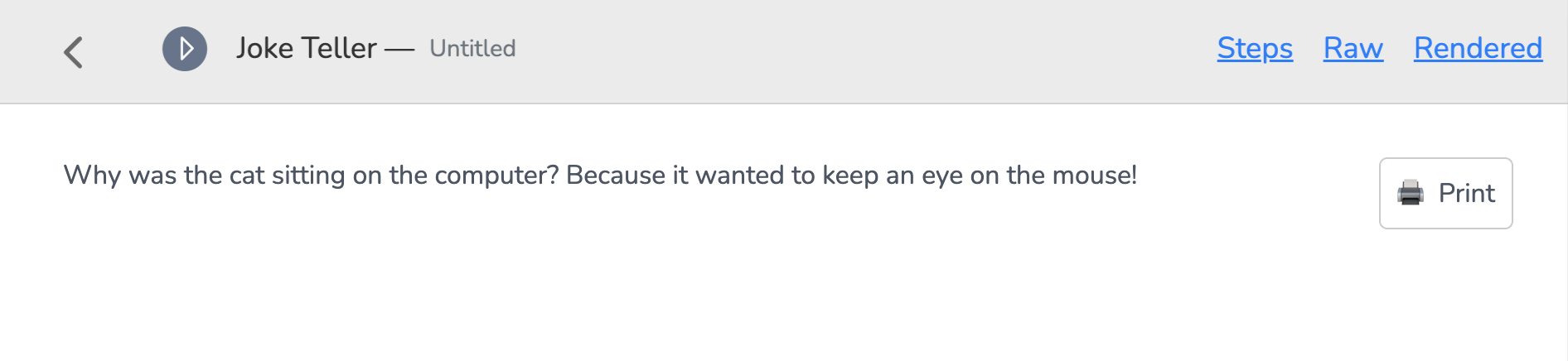

To set the prompt, click the node in the workspace, then edit the Prompt field in the right-hand panel. Let's tell GPT to tell us a joke about cats.

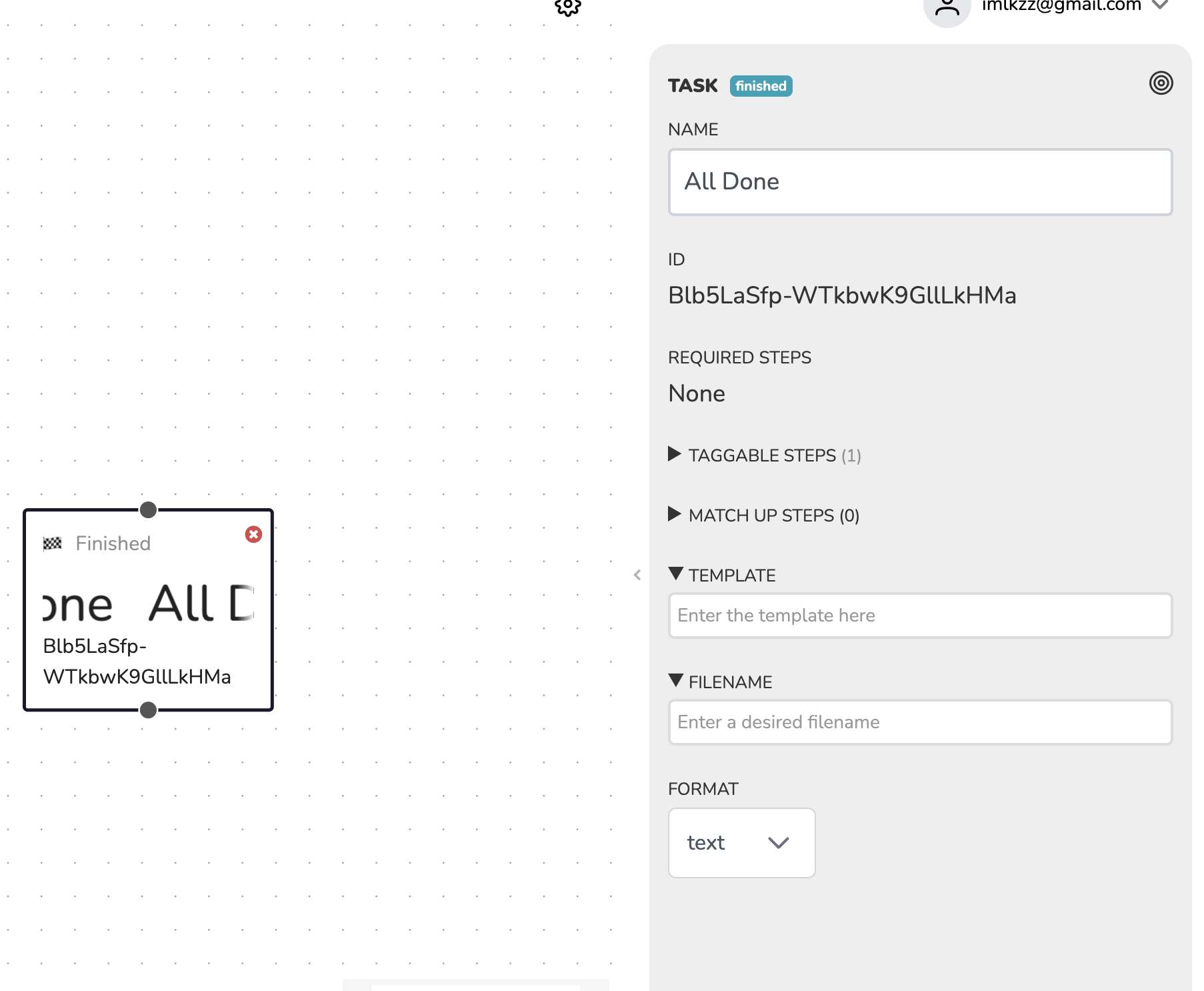

Adding a Finished node

The Finished node tells the app how to format the output from all the nodes within a package. To add one, right-click on the workspace, select Add Item, and then choose 🏁 Finished.

Changing the name

Just like with the LLM node, you can rename the Finished node by clicking the Untitled text in the input field on the right-hand panel. For this example, let's name the Finished node All Done.

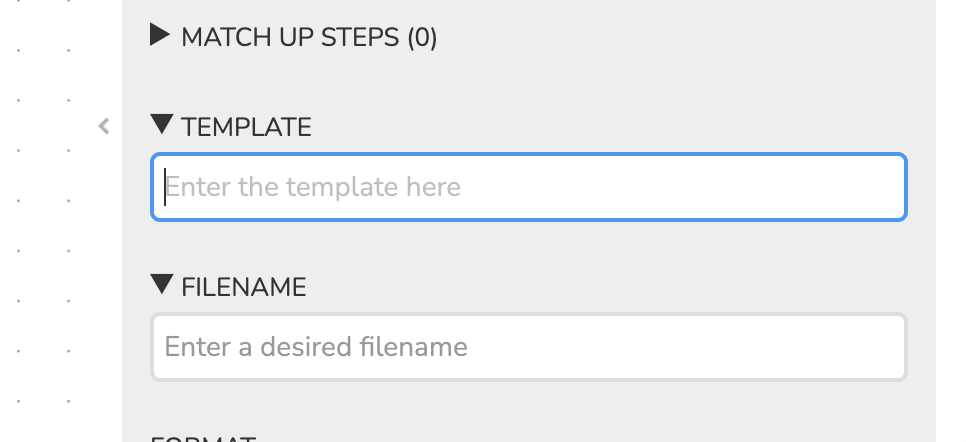

Including the results from the LLM node

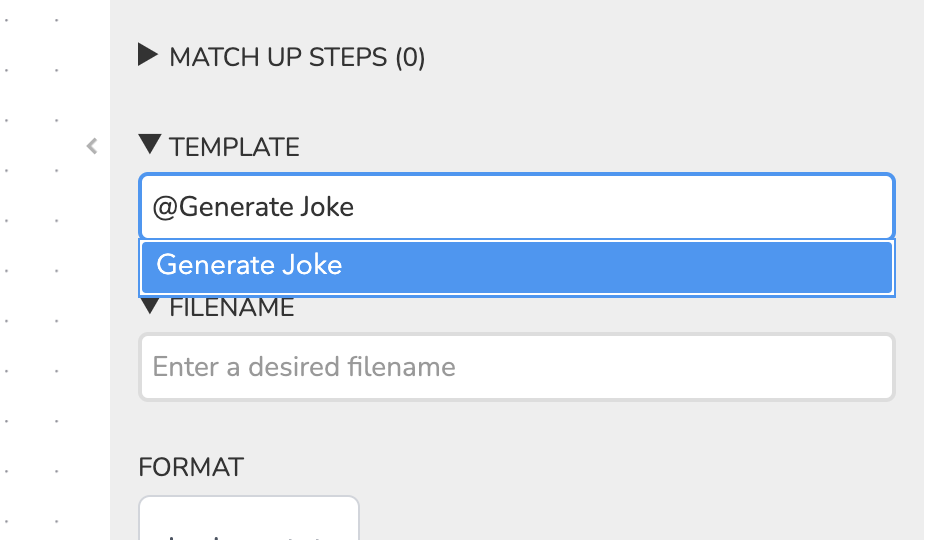

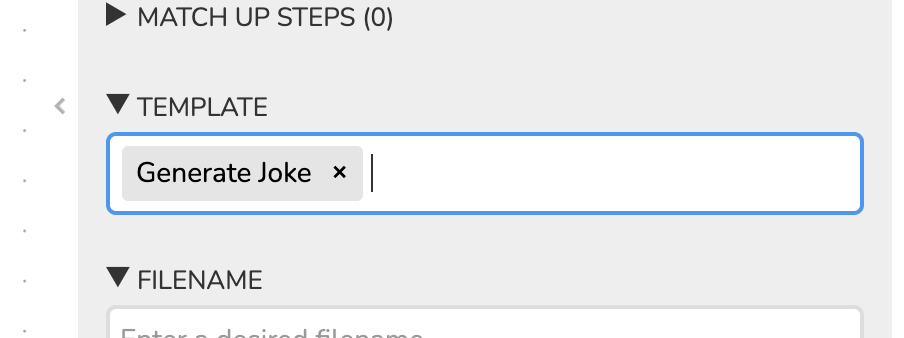

To get the output from our Generate Joke node, we need to “@ tag” the Generate Joke node in our output. To do that, go to the Template field of the Finished node configuration on the right side of the screen.

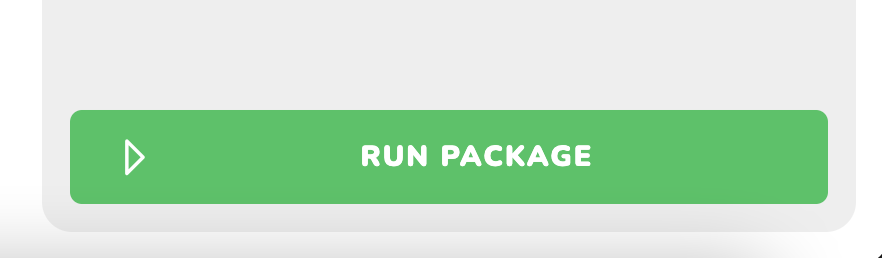
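This guide does not spell out the exact tag syntax, so the following is purely illustrative: a minimal Python model of what @ tagging does conceptually, replacing a tag in the Finished node's template with the text another node produced. The `@[Node Name]` bracket syntax, the `render_template` function, and the `outputs` dictionary are hypothetical and are not the app's actual implementation.

```python
import re

# Hypothetical tag syntax for illustration only: @[Node Name]
TAG_PATTERN = re.compile(r"@\[([^\]]+)\]")


def render_template(template: str, outputs: dict) -> str:
    """Replace every @[Node Name] tag with that node's output text."""

    def substitute(match: re.Match) -> str:
        node_name = match.group(1)
        if node_name not in outputs:
            # Fail loudly rather than leaving a dangling tag in the final output
            raise KeyError(f"No node named {node_name!r} in this package")
        return outputs[node_name]

    return TAG_PATTERN.sub(substitute, template)


# Outputs keyed by node name, as if the Generate Joke node had already run
outputs = {"Generate Joke": "Why did the cat sit on the computer? To keep an eye on the mouse."}
print(render_template("All Done! Here is your joke: @[Generate Joke]", outputs))
```

Keying outputs by node name is one reason giving each node a clear, unique name (such as Generate Joke) makes templates easier to read.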

Run the package

Your first package using the LLM node is now ready to run! Hit the run package button to see the output.

Configuration options

Here are all the configuration options you can use with the LLM node.

Prompt

The prompt is the text that you provide to the model to generate the output. You can use the prompt to ask questions, provide context, or give instructions to the model.

Supports @ tagging.

Model

The model option lets you choose which model generates the output. You can currently choose GPT-3.5 or GPT-4o, with more models from other providers coming soon.

Max Tokens

The max tokens option lets you set the maximum number of tokens that the model can generate. This can be useful if you want to limit the length of the output.
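To illustrate what the limit does, here is a minimal Python sketch that stops collecting output once `max_tokens` tokens have been produced. The `generate_with_limit` function and the token stream are hypothetical stand-ins; a real model uses its own subword tokenizer, so splitting on whitespace here only approximates how tokens are counted.

```python
from typing import Iterable, List


def generate_with_limit(token_stream: Iterable[str], max_tokens: int) -> List[str]:
    """Collect tokens from a model's output stream, stopping at max_tokens."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    output: List[str] = []
    for token in token_stream:
        output.append(token)
        if len(output) == max_tokens:
            break  # Limit reached: no further tokens are collected
    return output


# Whitespace-split words stand in for real model tokens (an approximation)
fake_stream = "Why did the cat sit on the computer? To keep an eye on the mouse.".split()
print(" ".join(generate_with_limit(fake_stream, 5)))  # prints "Why did the cat sit"
```

Because output simply stops at the limit, a long answer can end mid-sentence, so leave some headroom when setting Max Tokens.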